Using shared memory

Overview

Teaching: 15 min

Exercises: 5 min

Questions

What is OpenMP?

How does it work?

Objectives

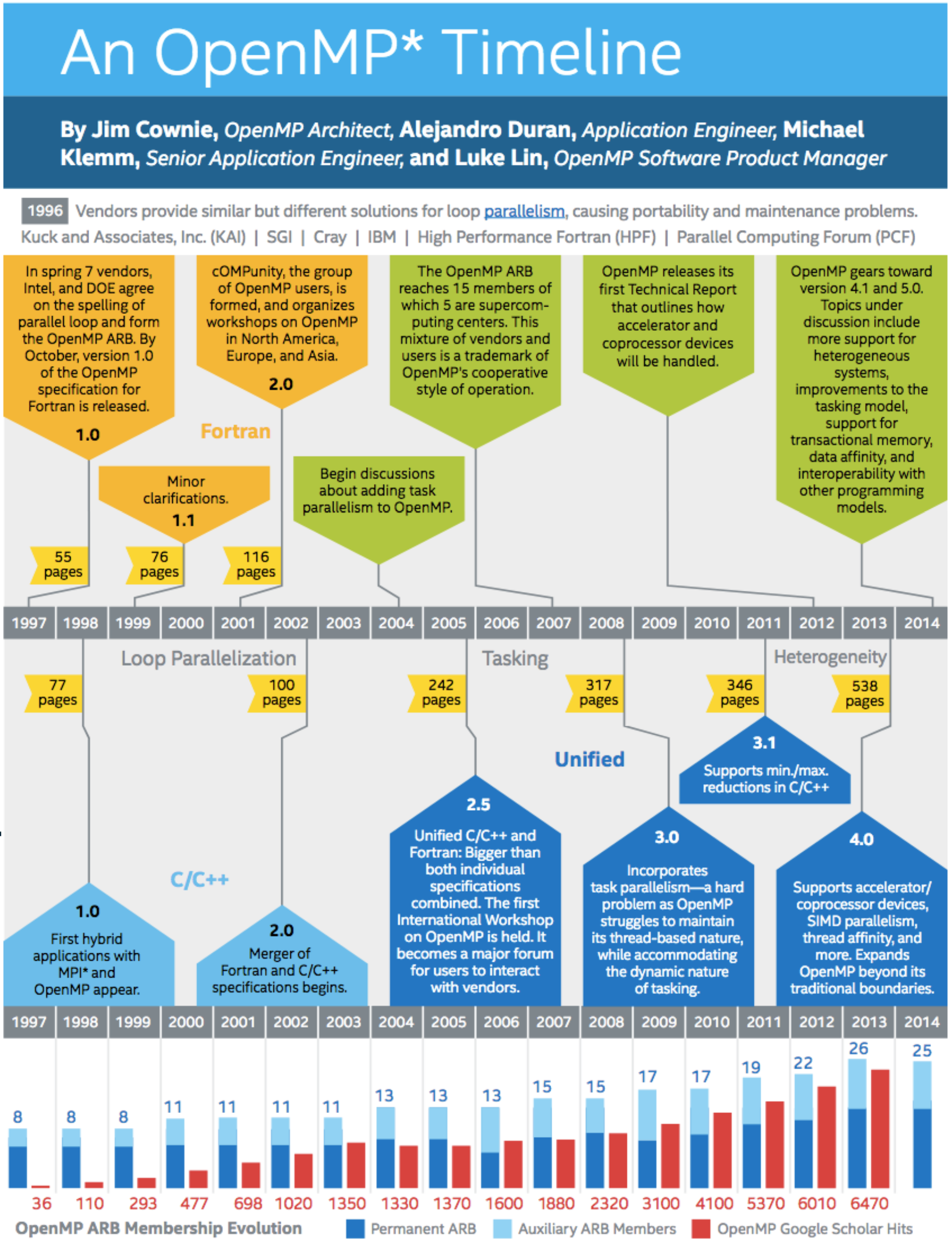

Get a general overview of the history of OpenMP

Understand how OpenMP helps parallelize programs

Brief history

You can consult the original in Intel’s Parallel Universe magazine.

OpenMP 5.0 was released in 2018; at the time of writing, the latest version is OpenMP 5.1, released in November 2020.

What is OpenMP

The OpenMP Application Program Interface (OpenMP API) is a collection of compiler directives, library routines, and environment variables that collectively define parallelism in C, C++ and Fortran programs and is portable across architectures from different vendors. Compilers from numerous vendors support the OpenMP API. See http://www.openmp.org for info, specifications, and support.

OpenMP in Hawk

Different compilers can support different versions of OpenMP. You can check which version a compiler supports by inspecting the _OPENMP macro, which the compiler defines as a decimal value of the form yyyymm, where yyyy and mm are the year and month of the OpenMP API version that the implementation supports. For example, using the GNU compilers on Hawk:

~$ module load compiler/gnu/9/2.0
~$ echo | cpp -fopenmp -dM | grep -i open
#define _OPENMP 201511

This indicates that the GNU 9.2.0 compilers support OpenMP 4.5 (released in November 2015). The versions supported by other GCC releases are:

GCC version    OpenMP version
4.8.5          3.1
5.5.0          4.0
6.4.0          4.5
7.3.0          4.5
8.1.0          4.5
9.2.0          4.5

OpenMP overview

- OpenMP's main strength is that it is relatively easy to use: it requires minimal modifications to the source code and automates much of the parallelization work.

- OpenMP shares data among parallel threads through shared variables.

- Unintended data sharing can create race conditions. A typical symptom is that the program's output changes from run to run as threads are scheduled differently.

- Synchronization can control race conditions, but it is expensive; it is often better to change how the data is accessed.

- OpenMP is limited to shared memory: unlike MPI, it cannot communicate across nodes.

How does it work?

Every code has serial and (hopefully) parallel sections. It is the programmer's job to identify the parallel sections and decide how best to parallelize them. With OpenMP this is done with special compiler directives (#pragma omp in C/C++, !$OMP in Fortran) that mark the sections of code to be distributed among threads. When the program reaches a parallel section, the master thread starts a team of worker threads; all threads in the team, including the master, execute that section and wait for each other at its end. Once all threads have finished, only the master thread continues with the rest of the program. OpenMP directives allow programmers to specify:

- the parallel regions in a code

- how to parallelize loops

- variable scope

- thread synchronization

- distribution of work among threads

Fortran:

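PROGRAM counter
  IMPLICIT NONE
  INTEGER, PARAMETER :: n = 10
  INTEGER :: i
  ! The loop index is private by default; PRIVATE(i) makes it explicit.
!$OMP PARALLEL DO PRIVATE(i)
  DO i = 1, n
     PRINT *, "I am counter i = ", i
  END DO
!$OMP END PARALLEL DO
END PROGRAM counter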

C:

#include <stdio.h>
#define N 10
int main(void)
{
    #pragma omp parallel for
    for (int i = 0; i < N; i++)
        printf("I am counter %d\n", i);
    return 0;
}

Further reading

Compilers and tools that support OpenMP:

https://www.openmp.org/resources/openmp-compilers-tools/

Very comprehensive tutorial:

https://hpc-tutorials.llnl.gov/openmp/

Key Points

OpenMP is an API that defines directives to parallelize programs written in Fortran, C and C++.

OpenMP relies on compiler directives (pragmas in C/C++, !$OMP sentinels in Fortran) to mark sections of code that run in parallel by distributing the work among threads.