Working on a remote HPC system

Overview

Teaching: 25 min

Exercises: 10 min

Questions

What is an HPC system?

How does an HPC system work?

How do I log on to a remote HPC system?

Objectives

Connect to a remote HPC system.

Understand the general HPC system architecture.

What is an HPC system?

The words “cloud”, “cluster”, and “high-performance computing” are used a lot in different contexts and with varying degrees of correctness. So what do they mean exactly? And more importantly, how do we use them for our work?

The cloud is a generic term commonly used to refer to remote computing resources of any kind, that is, any computers that you use but that are not right in front of you. Cloud can refer to machines serving websites, providing shared storage, providing web services (such as e-mail or social media platforms), as well as more traditional “compute” resources. An HPC system, on the other hand, is a network of computers, often called a cluster. The computers in a cluster typically share a common purpose, and are used to accomplish tasks that might otherwise be too big for any one computer.

Logging in

Go ahead and log in to the Hawk cluster: cl1 at Cardiff University.

[user@laptop ~]$ ssh yourUsername@hawklogin.cf.ac.uk

If you are from Aberystwyth, try the Sunbird cluster instead: sl2.sunbird.supercomputingwales.ac.uk at Swansea University.

[user@laptop ~]$ ssh a.yourUsername@sunbird.swansea.ac.uk

Remember to replace yourUsername with the username supplied by the instructors. You will be asked for your password. But watch out, the characters you type are not displayed on the screen.

How do I specify common ssh options?

To reduce issues with connecting, it can be useful to fix the settings to be used. For example, ssh will try every key in your ~/.ssh directory, and this can trigger the banning of your machine from accessing the system if you have more than one key.

On a Mac/Linux laptop/desktop you can add settings to $HOME/.ssh/config such as:

Host hawk
    Hostname hawklogin.cf.ac.uk
    User c.username
    IdentityFile ~/.ssh/id_rsa-hawk
    ForwardX11 yes
    ForwardX11Trusted yes

On Windows, depending on the software, these settings can be set in the settings menu.

Alternatively, use PreferredAuthentications password to force ssh to always use only a password. This may be needed if ssh keys are available on your own computer but are not used for Hawk, since attempts made with those keys can trigger the banning of your machine.

Another option, SetEnv LANG=C, can be useful if a locale setting on your desktop is not supported on Hawk for some reason and causes text encoding issues.
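One cautious way to try out these settings is to write them to a scratch file first and inspect the result before touching your real configuration. The sketch below assumes a POSIX shell; the scratch file name ssh_config_example is a hypothetical choice, and c.username is the placeholder user name from the text above.

```shell
# Write an example Host block to a scratch file, NOT to your real ~/.ssh/config.
# The file name is a hypothetical choice for this sketch.
config_file="${TMPDIR:-/tmp}/ssh_config_example"

cat > "$config_file" <<'EOF'
Host hawk
    Hostname hawklogin.cf.ac.uk
    User c.username
    PreferredAuthentications password
    SetEnv LANG=C
EOF

# Keep the file private to your user, as is usual for ssh configuration.
chmod 600 "$config_file"

# Review the block before copying it into $HOME/.ssh/config by hand.
cat "$config_file"
```

Once a block like this is in $HOME/.ssh/config, typing ssh hawk should be enough to start the connection with those settings.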

You are logging in using a program known as the secure shell or ssh. This establishes a temporary encrypted connection between your laptop and hawklogin.cf.ac.uk. The word before the @ symbol, e.g. yourUsername here, is the user account name that Lola has access permissions for on the cluster.

Where do I get this ssh from?

On Linux and/or macOS, the ssh command line utility is almost always pre-installed. Open a terminal and type ssh -V to check if that is the case.

At the time of writing, OpenSSH support on Microsoft Windows is still very recent. Alternatives are PuTTY, Bitvise SSH, mRemoteNG or MobaXterm. Download one of them, install it and open the GUI. The GUI asks for your user name and the destination address or IP of the computer you want to connect to. Once provided, you will be asked for your password just like in the example above.

Where are we?

Very often, many users are tempted to think of a high-performance computing installation as one giant, magical machine. Sometimes, people will assume that the computer they’ve logged onto is the entire computing cluster. So what’s really happening? What computer have we logged on to? The name of the current computer we are logged onto can be checked with the hostname command. (You may also notice that the current hostname is also part of our prompt!)

Hawk:

[yourUsername@cl1 ~]$ hostname

cl1

Sunbird:

[yourUsername@sl2(Sunbird) ~]$ hostname

sl2.sunbird.supercomputingwales.ac.uk

Nodes

Individual computers that compose a cluster are typically called nodes (although you will also hear people call them servers, computers and machines). On a cluster, there are different types of nodes for different types of tasks. The node where you are right now is called the head node, login node or submit node. A login node serves as an access point to the cluster. As a gateway, it is well suited for uploading and downloading files, setting up software, and running quick tests. It should never be used for doing actual work.

The real work on a cluster gets done by the worker (or compute) nodes. Worker nodes come in many shapes and sizes, but generally are dedicated to long or hard tasks that require a lot of computational resources.
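As a rough illustration of the login/worker distinction, the sketch below guesses the kind of node from its hostname. The prefixes (cl and sl for login nodes; ccs, cca and scs for worker nodes) are assumptions taken from the example names that appear in this lesson, and node_kind is a hypothetical helper, not a command provided by the cluster.

```shell
# Guess the node type from a hostname.
# The patterns are assumptions based on the example names in this lesson.
node_kind() {
    case "$1" in
        cl[0-9]*|sl[0-9]*) echo "login node" ;;
        ccs*|cca*|scs*)    echo "worker node" ;;
        *)                 echo "unknown" ;;
    esac
}

# Classify the machine you are currently logged in to.
node_kind "$(hostname)"
```

On your own laptop this should report unknown, since its name will not match either pattern.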

All interaction with the worker nodes is handled by a specialized piece of software called a scheduler (the scheduler used in this lesson is called Slurm). We’ll learn more about how to use the scheduler to submit jobs next, but for now, it can also tell us more information about the worker nodes.

For example, we can view all of the worker nodes with the sinfo command (Same for both sites).

[yourUsername@cl1 ~]$ sinfo

Hawk:

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

compute* up 3-00:00:00 133 alloc ccs[0001-0080,0082-0134]

compute* up 3-00:00:00 1 idle ccs0081

compute_amd up 3-00:00:00 1 fail* cca0057

compute_amd up 3-00:00:00 61 alloc cca[0001-0020,0023-0056,0058-0064]

compute_amd up 3-00:00:00 2 idle cca[0021-0022]

highmem up 3-00:00:00 5 mix ccs[1001,1008-1009,1023-1024]

highmem up 3-00:00:00 21 alloc ccs[1002-1007,1010-1022,1025-1026]

gpu up 2-00:00:00 3 mix ccs[2009,2012-2013]

gpu up 2-00:00:00 10 alloc ccs[2001-2008,2010-2011]

gpu_v100 up 2-00:00:00 4 mix ccs[2102,2106,2110-2111]

gpu_v100 up 2-00:00:00 11 alloc ccs[2101,2103-2105,2107-2109,2112-2115]

htc up 3-00:00:00 15 mix ccs[1009,1023-1024,2009,2012-2013,2106,2110-2111,3001,3003,3005,3008,3017-3018]

htc up 3-00:00:00 48 alloc ccs[1010-1022,1025-1026,2008,2010-2011,2103-2105,2107-2109,2112-2115,3002,3004,3006-3007,3009-3016,3019-3026]

dev up 1:00:00 2 alloc ccs[0135-0136]

Sunbird:

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

compute* up 3-00:00:00 2 fail* scs[0050,0097]

compute* up 3-00:00:00 2 drain* scs[0041,0056]

compute* up 3-00:00:00 17 down* scs[0011,0020,0023,0034,0048-0049,0055,0064,0086,0094,0110,0113,0115-0118,0121]

compute* up 3-00:00:00 4 drain scs[0030-0031,0039,0071]

compute* up 3-00:00:00 37 mix scs[0008-0009,0012-0013,0017-0018,0051-0054,0073-0085,0087-0093,0095-0096,0100,0102,0120,0122-0123]

compute* up 3-00:00:00 47 alloc scs[0001,0003-0007,0014-0016,0019,0021-0022,0024-0029,0032,0035-0038,0040,0042,0044-0047,0057,0059-0060,0063,0065-0067,0069-0070,0098,0103-0104,0106-0109,0112,0114]

compute* up 3-00:00:00 2 idle scs[0043,0111]

compute* up 3-00:00:00 12 down scs[0002,0010,0033,0058,0061-0062,0068,0072,0099,0101,0105,0119]

gpu up 2-00:00:00 1 mix scs2001

gpu up 2-00:00:00 3 idle scs[2002-2004]

development up 30:00 2 fail* scs[0050,0097]

development up 30:00 2 drain* scs[0041,0056]

development up 30:00 17 down* scs[0011,0020,0023,0034,0048-0049,0055,0064,0086,0094,0110,0113,0115-0118,0121]

development up 30:00 4 drain scs[0030-0031,0039,0071]

development up 30:00 37 mix scs[0008-0009,0012-0013,0017-0018,0051-0054,0073-0085,0087-0093,0095-0096,0100,0102,0120,0122-0123]

development up 30:00 47 alloc scs[0001,0003-0007,0014-0016,0019,0021-0022,0024-0029,0032,0035-0038,0040,0042,0044-0047,0057,0059-0060,0063,0065-0067,0069-0070,0098,0103-0104,0106-0109,0112,0114]

development up 30:00 2 idle scs[0043,0111]

development up 30:00 12 down scs[0002,0010,0033,0058,0061-0062,0068,0072,0099,0101,0105,0119]

accel_ai up 2-00:00:00 5 mix scs[2041-2045]

xaccel_ai up 2-00:00:00 5 mix scs[2041-2045]

xaccel_ai up 2-00:00:00 1 idle scs2046

accel_ai_dev up 2:00:00 5 mix scs[2041-2045]

accel_ai_mig up 12:00:00 1 idle scs2046

s_highmem_cdt up 3-00:00:00 2 idle scs[0151-0152]

s_compute_chem up 3-00:00:00 2 alloc scs[3001,3003]

s_compute_chem_rse up 1:00:00 2 alloc scs[3001,3003]

s_gpu_eng up 2-00:00:00 1 idle scs2021

s_highmem_opt up 3-00:00:00 1 idle scs0160
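Listings like these can get long, so it may help to total the NODES column per STATE for one partition. The sketch below does this with awk on a shortened copy of the Hawk listing; count_states is a hypothetical helper name, and the column positions (NODES fourth, STATE fifth) are assumed from the default layout shown above.

```shell
# Sum the NODES column (4th) per STATE (5th) for one partition,
# reading sinfo-style output from standard input.
count_states() {
    awk -v part="$1" 'NR > 1 && $1 == part { n[$5] += $4 }
                      END { for (s in n) print s, n[s] }' | sort
}

# A shortened copy of the Hawk listing, used here as sample input.
sample='PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
compute* up 3-00:00:00 133 alloc ccs[0001-0080,0082-0134]
compute* up 3-00:00:00 1 idle ccs0081
gpu up 2-00:00:00 3 mix ccs[2009,2012-2013]
gpu up 2-00:00:00 10 alloc ccs[2001-2008,2010-2011]'

printf '%s\n' "$sample" | count_states 'compute*'
# prints:
# alloc 133
# idle 1
```

On the cluster itself, the same helper could be fed live data with sinfo | count_states 'compute*'.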

There are also specialized machines used for managing disk storage, user authentication, and other infrastructure-related tasks. Although we do not typically log on to or interact with these machines directly, they enable a number of key features like ensuring our user account and files are available throughout the HPC system.

Shared file systems

This is an important point to remember: files saved on one node (computer) are often available everywhere on the cluster!

What’s in a node?

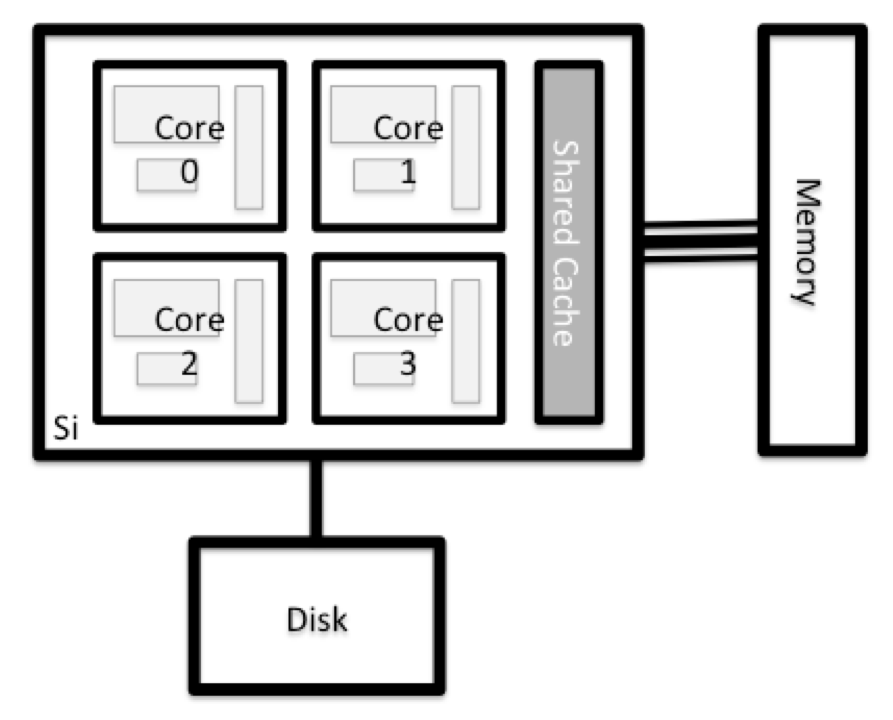

All of an HPC system’s nodes have the same components as your own laptop or desktop: CPUs (sometimes also called processors or cores), memory (or RAM), and disk space. CPUs are a computer’s tool for actually running programs and calculations. Information about a current task is stored in the computer’s memory. Disk refers to all storage that can be accessed like a file system. This is generally storage that can hold data permanently, i.e. data is still there even if the computer has been restarted.

Explore Your Computer

Try to find out the number of CPUs and amount of memory available on your personal computer.

Explore The Login Node (Same for both sites)

Now we’ll compare the size of your computer with the size of the login node. To see the number of processors, run:

[yourUsername@cl1 ~]$ nproc --all

How about memory? Try running:

[yourUsername@cl1 ~]$ free -m

Getting more information (Same for both sites)

You can get more detailed information on both the processors and memory by using different commands.

For more information on processors use lscpu:

[yourUsername@cl1 ~]$ lscpu

For more information on memory you can look in the /proc/meminfo file:

[yourUsername@cl1 ~]$ cat /proc/meminfo
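To make the comparison between machines easier, the two numbers can be condensed into a single line. The sketch below is one possible way, assuming a Linux system: mem_total_mib is a hypothetical helper that reads the MemTotal line of /proc/meminfo, whose kB label denotes units of 1024 Bytes, and converts it to whole MiB.

```shell
# Print MemTotal from a meminfo-style file, converted to whole MiB.
# Defaults to /proc/meminfo; another path can be passed for testing.
mem_total_mib() {
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# One-line summary of the machine you are logged in to (Linux only).
echo "$(hostname): $(nproc --all) CPUs, $(mem_total_mib) MiB of memory"
```

Running the same lines on your laptop (if it runs Linux) and on the login node gives two summaries that can be compared side by side.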

Explore a Worker Node

Finally, let’s look at the resources available on the worker nodes where your jobs will actually run. Try running this command to see the name, CPUs and memory available on the worker nodes (the instructors will give you the ID of the compute node to use):

Hawk:

[yourUsername@cl1 ~]$ sinfo -n ccs9002 -o "%n %c %m"

Sunbird:

[yourUsername@sl2(Sunbird) ~]$ sinfo -n scs0122 -o "%n %c %m"

Compare Your Computer, the Login Node and the Worker Node

Compare your laptop’s number of processors and memory with the numbers you see on the cluster login node and worker node. Discuss the differences with your neighbor. What implications do you think the differences might have on running your research work on the different systems and nodes?

Units and Language

A computer’s memory and disk are measured in units called Bytes (one Byte is 8 bits). As today’s files and memory have grown to be large by historic standards, volumes are noted using the SI prefixes. So 1000 Bytes is a Kilobyte (kB), 1000 Kilobytes is a Megabyte (MB), 1000 Megabytes is a Gigabyte (GB), etc.

History and common language have, however, mixed this notation with a different meaning. When people say “Kilobyte”, they often mean 1024 Bytes instead. In that spirit, a Megabyte is 1024 Kilobytes. To address this ambiguity, the International System of Quantities standardizes the binary prefixes (with a base of 1024) as kibi, mebi, gibi, etc.
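The gap between the two conventions grows with size, which a short calculation makes visible. The sketch below converts 16 GB (SI, base 1000) into gibibytes (GiB, base 1024); to_gib is a hypothetical helper name, and the figure of 16 GB is only an illustrative value.

```shell
# Convert a byte count to GiB (base 1024), printed with two decimals.
to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

# 16 GB in SI units is 16 * 1000^3 Bytes.
bytes=$((16 * 1000 * 1000 * 1000))
echo "16 GB = ${bytes} Bytes = $(to_gib "$bytes") GiB"
# prints: 16 GB = 16000000000 Bytes = 14.90 GiB
```

The roughly 7% difference is why the same memory or disk can appear smaller in one tool than in another.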

Differences Between Nodes

Many HPC clusters have a variety of nodes optimized for particular workloads. Some nodes may have a larger amount of memory, or specialized resources such as Graphics Processing Units (GPUs).

With all of this in mind, we will now cover how to talk to the cluster’s scheduler, and use it to start running our scripts and programs!

Key Points

An HPC system is a set of networked machines.

HPC systems typically provide login nodes and a set of worker nodes.

The resources found on independent (worker) nodes can vary in volume and type (amount of RAM, processor architecture, availability of network mounted file systems, etc.).

Files saved on one node are available on all nodes.